Nvidia has unveiled Rubin, a next-generation GPU architecture and tightly integrated AI computing platform that is set to replace the US chip giant’s current flagship Blackwell architecture.

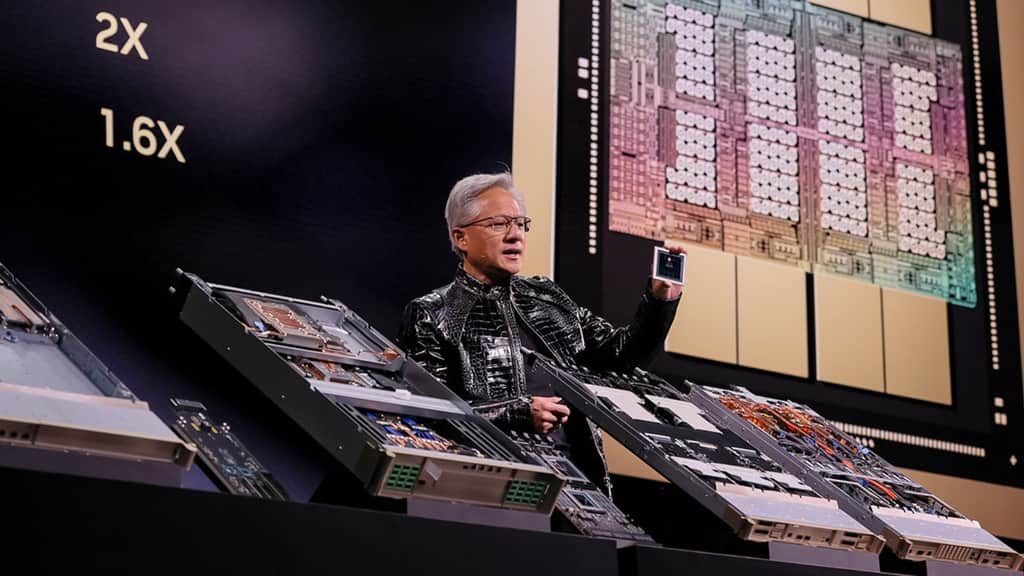

Rubin is Nvidia’s first extreme-codesigned platform and will comprise six AI chips, alongside various networking technologies and system software, all working together as a single computing unit. It was launched by Nvidia founder and CEO Jensen Huang onstage at CES 2026 in Las Vegas, United States on Monday, January 5. Rubin computing units are already in full production, with products and services powered by these units expected to launch in the second half of 2026.

In his roughly two-hour keynote speech, Huang said that AI is scaling into every domain and every device. With Rubin, Nvidia aims to “push AI to the next frontier” while slashing the cost of generating tokens to roughly one-tenth that of the previous platform, making large-scale AI far more economical to deploy, he added.

Nvidia on Monday also announced Alpamayo, its latest series of open-weight AI reasoning models, designed specifically for autonomous vehicles such as self-driving cars.

The launch of Rubin comes months after Nvidia reported record-high data centre revenue, up 66 per cent over the prior year. This growth has been attributed to increased demand for Blackwell and Blackwell Ultra GPUs. Demand for these GPUs has served as a key indicator of whether the AI boom is sustainable or turning into a bubble. The runaway success of Blackwell and the chips based on it has set a high bar, in both performance and market demand, for the newly unveiled Rubin platform.

“Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence. What that means is some $10 trillion or so of the last decade of computing is now being modernized to this new way of doing computing,” Huang said in his keynote speech.

“The faster you train AI models, the faster you can get the next frontier out to the world. This is your time to market. This is technology leadership,” he added. Emphasising Nvidia’s efforts to roll out open-weight AI models across domains, Huang said that these models have formed a global ecosystem of intelligence that developers and enterprises can build on.

“Every single six months, a new model is emerging, and these models are getting smarter and smarter. Because of that, you could see the number of downloads has exploded,” he further said.

Nvidia’s next-generation computing platform has been named after Vera Rubin, an American astronomer known for her research on galaxy rotation rates, which provided evidence that dark matter exists.

Rubin has been described as an AI supercomputer made up of six chips. All of the platform’s components have been designed together, an approach Nvidia calls extreme codesign. This is important, Huang said, because scaling AI to gigascale requires tightly integrated innovation across chips, trays, racks, networking, storage and software to eliminate bottlenecks and dramatically reduce the costs of training and inference.

Rubin supports third-generation confidential computing and will be the first rack-scale trusted computing platform, according to the company.

In terms of performance, Nvidia claimed that Rubin GPUs were capable of delivering five times as much AI training compute as Blackwell. Overall, the Rubin architecture can be used to train a large ‘mixture-of-experts (MoE)’ AI model in the same amount of time as Blackwell while using a quarter of the GPUs and at one-seventh the token cost.

Nvidia also launched an Inference Context Memory Storage platform for AI-native storage. It includes an AI-native KV-cache layer that boosts long-context inference with five times higher token throughput, better performance per dollar of total cost of ownership (TCO), and five times better power efficiency.

Primary Source

The Indian Express